WallFacer: Harnessing Multi-dimensional Ring Parallelism for Efficient Long Sequence Model Training

Jun 30, 2024· ,,,,,,,·

0 min read

,,,,,,,·

0 min read

Ziming Liu

Shaoyu Wang

Shenggan Cheng

Zhongkai Zhao

Kai Wang

Xuanlei Zhao

James Demmel

Yang You

WallFacer Attention Block

WallFacer Attention BlockAbstract

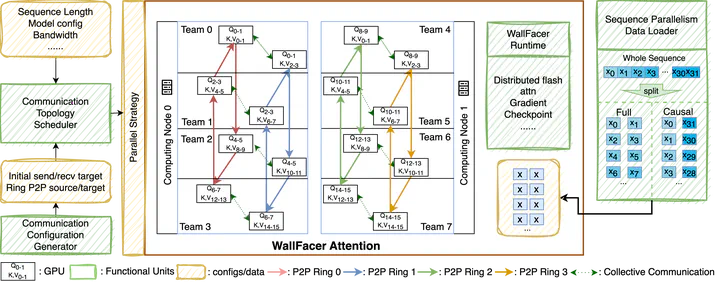

Training Transformer models on long sequences in a distributed setting poses significant challenges in terms of efficiency and scalability. Current methods are either constrained by the number of attention heads or excessive communication overheads. To address this problem, we propose WallFacer, a multi-dimensional distributed training system for long sequences, fostering an efficient communication paradigm and providing additional tuning flexibility for communication arrangements. Specifically, WallFacer introduces an extra parallel dimension to substantially reduce communication volume and avoid bandwidth bottlenecks. Through comprehensive experiments across diverse hardware environments and on both Natural Language Processing (NLP) and Computer Vision (CV) tasks, we demonstrate that our approach significantly surpasses state-of-the-art methods that support near-infinite sequence lengths, achieving performance improvements of up to 77.12% on GPT-style models and up to 114.33% on DiT (Diffusion Transformer) models.

Type

Publication

Arxiv Preprint