About Me

Hi, I am a third-year CS Ph.D. candidate at NUS, supervised by Prof. Yang You, and a member of the HPC-AI Lab. I received my bachelor’s degree in computer science and engineering from Peking University in 2020, supervised by Prof. Tong Yang. I had the honor of receiving the 2025 ByteDance Scholarship Award (20 among all CS PhDs in China and Singapore).

My research interests are machine learning systems and high performance computing. I have been working on various parallel techniques in large model training and inference, and I am also interested in the sparsity of deep learning models. I am looking forward to collaborations and research internship opportunities, so please feel free to contact me if you are interested in my research.

- Machine Learning Systems

- High Performance Computing

- Distributed Training & Inference

- Sparse Inference & Training

PhD Computer Science

National University of Singapore

MSc Artificial Intelligence

National University of Singapore

BSc Computer Science

Peking University

2025.11.21 Awarded the ByteDance Scholarship Award (20 among all CS PhDs in China and Singapore).

2025.11.11 One paper accepted by PPoPP '26.

2025.09.22 We release Expert-as-a-Service (EaaS) for MoE serving! Find the blog here.

2025.09.18 One paper accepted by NeurIPS '25.

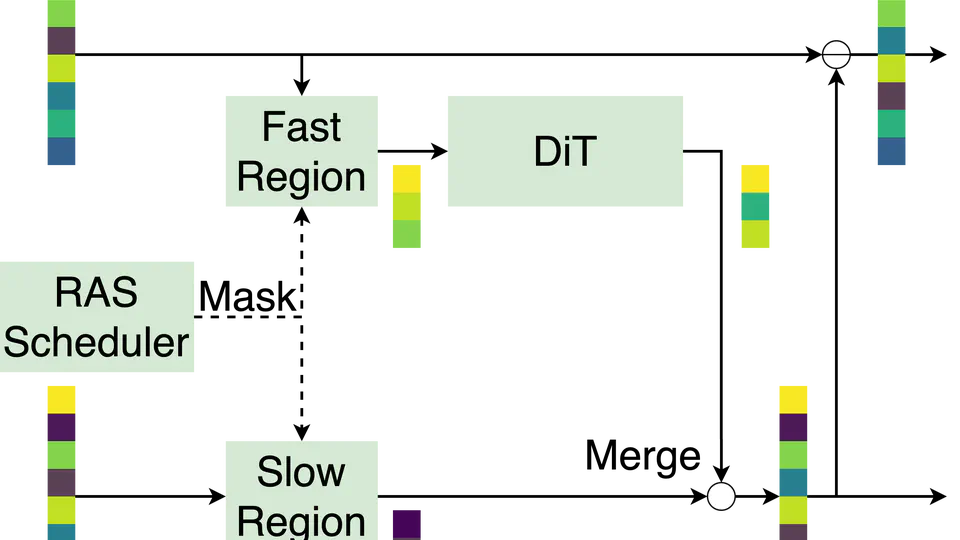

2024.12.09 Release RAS for efficient DiTs. Code & paper: aka.ms/ras-dit

2024.12.09 Awarded the "Stars of Tomorrow" Certificate from MSRA (top 10% intern).

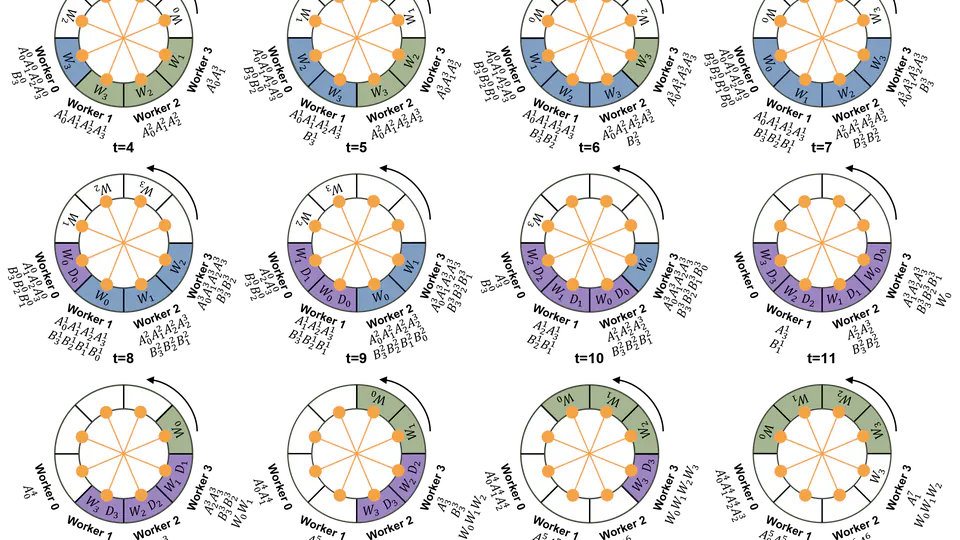

2024.11.12 One paper accepted by PPoPP '25.

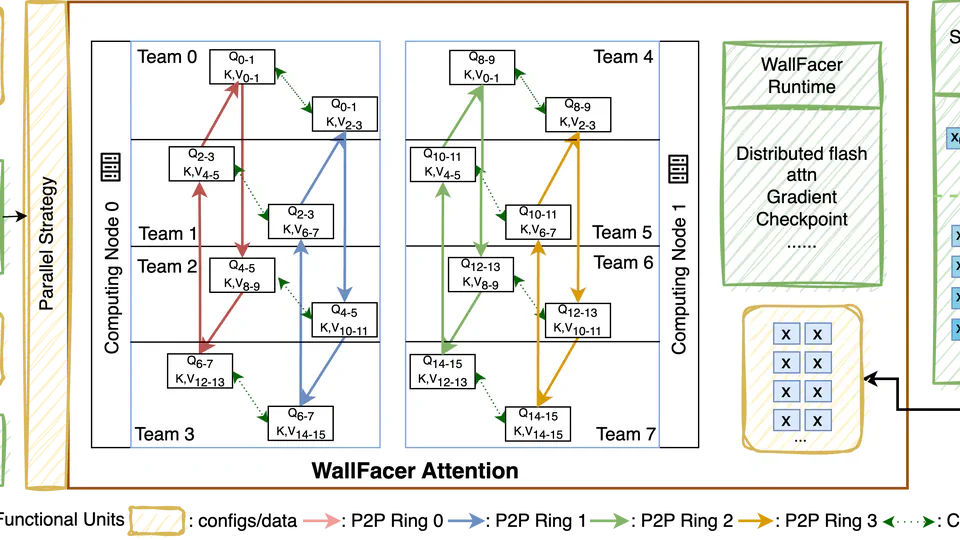

2024.10.03 One paper accepted by ASPLOS '25.

2024.06.27 Awarded the SoC Teaching Fellowship (3 among all NUS CS PhD students).

2024.02.16 One paper accepted by MLSys '24.

2024.01.15 One paper accepted by ICLR '24.

2023.06.17 One paper accepted by SC '23.

2023.01.07 Started my PhD career.

Experience

Research Intern

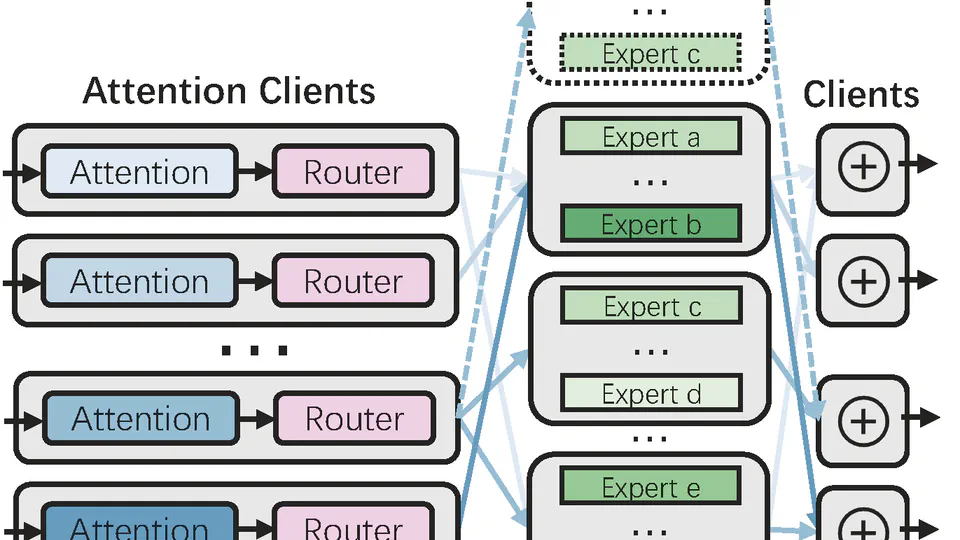

Qiji Zhifeng

Working on a large-scale MoE model serving system. Paper just released!

Research Intern

Microsoft Research

Working on sparse inference and training of text-to-image and text-to-video models. Supervised by Dr. Zhenhua Han and Dr. Yuqing Yang. Awarded the “Stars of Tomorrow” Certificate from MSRA (top 10% intern).

Research Intern

HPC-AI Tech

Responsibilities include:

- Developing the efficient LLM inference system EnergonAI.

- Optimizing the implementation of ColossalAI.

Machine Learning Engineer

ByteDance

NLP algorithm engineer at Lark, ByteDance.

Education

PhD Computer Science

National University of Singapore

Working on machine learning systems, supervised by Presidential Young Professor Yang You.

MSc Artificial Intelligence

National University of Singapore

BSc Computer Science

Peking University

Bachelor’s degree in computer science and engineering. Supervised by Prof. Tong Yang.